Introducing Galexie: Efficiently Extract and Store Stellar Data

Author

Urvi Savla

Data

Developer Tool

Welcome to the latest installment in our series on the Composable Data Platform, the next-generation data-access platform on Stellar. Today, we're excited to introduce the first component, Galexie, a lightweight application that extracts ledger data from the Stellar network. Read on to find out more!

One of the main challenges in working with Stellar network data is the tight coupling between the data extraction and transformation processes. Developers have few tools for reading and saving data directly from the Stellar network, which constrains how they can design their applications. To address this, we created Galexie as a dedicated extractor application that decouples data ingestion from transformation. This approach offers greater versatility than hosting a monolithic service like Horizon or Hubble.

The primary function of Galexie is to efficiently export the entire corpus of Stellar network data and store it in a cloud-based data lake. This creates a foundation of preprocessed data that can be rapidly accessed and transformed by various custom applications tailored to specific needs.

The data exported by Galexie helps tools like Hubble and Horizon build derived data. Unlike the raw ledgers written to the History Archives, which are an unprocessed view of the network’s history, Galexie exports complete transaction metadata, offering precomputed data that is ready for use.

This design not only supports existing tools but also allows for more efficient data processing and opens up new possibilities for data analysis within the Stellar ecosystem. In the following sections, we'll explore Galexie's features, architecture, and potential applications.

What is Galexie?

Galexie is a simple, lightweight application that bundles Stellar network data, processes it, and writes it to an external data store. It includes the following capabilities:

- Data Extraction: Extract transaction metadata from the Stellar network.

- Compression: Compress the transaction metadata for optimized storage.

- Storage Options: Connect to a data store of your choice, beginning with Google Cloud Storage (GCS).

- Modes of Operation: Upload a fixed range of ledgers to cloud storage, or continuously stream new ledgers to cloud storage as they are written to the Stellar network.

Architecture Overview

Galexie is built around a few key design principles:

- Simplicity: Do just one thing, exporting ledger data efficiently, and do it well.

- Portability: Write data in a flat manner, so there is no need for complex indexing mechanisms in the storage system. This allows us to easily swap out one data storage option with another.

- Decentralization: Encourage builders to own and manage their own data.

- Extensibility: Make it easy to add support for new storage systems.

- Resiliency: Make it possible for interrupted operations to resume cleanly after a restart. An operator should be able to run several instances for redundancy without conflict.

Non-goal: Galexie is not intended or designed for live ingestion or for interacting with the Stellar network. Captive Core is much better suited for live ingestion.
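To make the resiliency and extensibility principles more concrete, here is a minimal Go sketch under stated assumptions. `DataStore`, `memStore`, `fileKey`, and `resumePoint` are hypothetical names invented for this illustration; they are not Galexie's actual API. The idea it shows: because each file's key is derived deterministically from its ledger range, a restarted exporter can skip files that already exist, and two instances that produce the same file write to the same key instead of conflicting. Supporting a new storage backend would then only require implementing the small interface.

```go
package main

import (
	"fmt"
	"sync"
)

// DataStore is a hypothetical minimal storage abstraction. A new backend
// (GCS, S3, local disk, ...) would implement these two methods.
type DataStore interface {
	Exists(key string) (bool, error)
	// PutIfNotExists writes data only if key is absent and reports whether it wrote.
	PutIfNotExists(key string, data []byte) (bool, error)
}

// memStore is an in-memory DataStore used only for this illustration.
type memStore struct {
	mu      sync.Mutex
	objects map[string][]byte
}

func newMemStore() *memStore { return &memStore{objects: map[string][]byte{}} }

func (m *memStore) Exists(key string) (bool, error) {
	m.mu.Lock()
	defer m.mu.Unlock()
	_, ok := m.objects[key]
	return ok, nil
}

func (m *memStore) PutIfNotExists(key string, data []byte) (bool, error) {
	m.mu.Lock()
	defer m.mu.Unlock()
	if _, ok := m.objects[key]; ok {
		return false, nil // an earlier run or another instance already wrote it
	}
	m.objects[key] = data
	return true, nil
}

// fileKey is a simplified, hypothetical object key: just the file's ledger range.
func fileKey(first, last uint32) string { return fmt.Sprintf("%d-%d", first, last) }

// resumePoint returns the first ledger of the first file in [start, end] that
// is not yet in the store, or end+1 if every file is present.
// start is assumed to be aligned to a file boundary.
func resumePoint(ds DataStore, start, end, perFile uint32) (uint32, error) {
	for first := start; first <= end; first += perFile {
		ok, err := ds.Exists(fileKey(first, first+perFile-1))
		if err != nil {
			return 0, err
		}
		if !ok {
			return first, nil
		}
	}
	return end + 1, nil
}

func main() {
	ds := newMemStore()
	// Simulate an interrupted run that uploaded the first two 64-ledger files.
	ds.PutIfNotExists(fileKey(1, 64), []byte("batch"))
	ds.PutIfNotExists(fileKey(65, 128), []byte("batch"))

	next, _ := resumePoint(ds, 1, 640, 64)
	fmt.Println("resume at ledger", next) // resume at ledger 129

	// A second writer producing the same file does not overwrite it.
	wrote, _ := ds.PutIfNotExists(fileKey(1, 64), []byte("batch"))
	fmt.Println("duplicate write performed:", wrote) // duplicate write performed: false
}
```

A conditional "put if absent" is one common way to make concurrent writers safe; whether a given cloud store offers it natively varies by provider.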

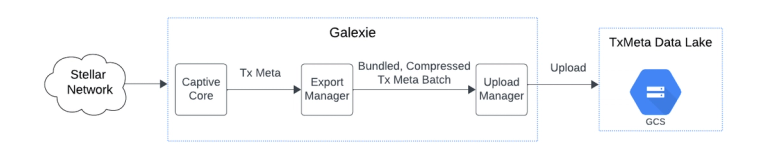

The architecture diagram above depicts the various components within Galexie.

Data Format and Storage

Like Stellar Core, Galexie emits transaction metadata in the standard XDR format. This format is compatible with existing systems like Horizon and Hubble, which lets developers easily swap the XDR data source of their application backends.

We decided to support storing multiple ledgers in each exported file. This reduces the number of files written to a data store and makes downloading large ranges of data significantly more efficient. In our experiments, bulk downloading the same ledger range was twice as fast when each file contained a bundle of 64 ledgers as when each file contained a single ledger.
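A quick way to see why bundling helps is to count the objects a client must fetch for a given range. The Go sketch below is illustrative only: `filesForRange` is a hypothetical helper, and it assumes 1-based file boundaries (1 to 64, 65 to 128, and so on), matching the file-naming example later in this article. It counts files, not download time, so the 2x speedup remains the article's measured result rather than something the code derives.

```go
package main

import "fmt"

// filesForRange returns how many exported files must be downloaded to read
// ledgers first..last (inclusive) when each file bundles perFile ledgers and
// file boundaries are 1..perFile, perFile+1..2*perFile, and so on.
func filesForRange(first, last, perFile uint32) uint32 {
	firstFile := (first - 1) / perFile // index of the file holding `first`
	lastFile := (last - 1) / perFile   // index of the file holding `last`
	return lastFile - firstFile + 1
}

func main() {
	for _, perFile := range []uint32{1, 64} {
		fmt.Printf("%2d ledger(s) per file: %d files\n", perFile, filesForRange(1, 64000, perFile))
	}
}
```

For the first 64,000 ledgers this gives 64,000 single-ledger objects versus 1,000 bundled ones, so per-object request overhead is paid far less often, which is one plausible reason bulk downloads are faster.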

All uploaded files are compressed using Zstandard as the default compression algorithm to save upload and download bandwidth as well as storage space.

File and Directory Structure

To allow further organization of data, we added support for partitioning the data via a directory structure. The number of ledgers per file and number of files per partition are both configurable.

We also encode additional information into the file and directory names. These names act as sorting tokens and eliminate the need for separate indexing. The naming format has two components:

- Each directory name contains the first and last ledger numbers in the partition, and each file name contains the start and end ledger numbers in the file.

- Additionally, directory names are prefixed with a sorting token: the hexadecimal representation of (uint_max - starting ledger of the partition), where uint_max is the largest 32-bit unsigned integer, 0xFFFFFFFF. File names are prefixed with the hexadecimal representation of (uint_max - starting ledger of the file). These tokens act as sorting identifiers.

Because a larger starting ledger produces a smaller token, listing files and directories in lexicographic order returns them in descending ledger order, with the most recent ledgers first. This optimizes retrieval of the latest ledgers.

For example, using 64 ledgers per file and 1,000 files per directory (64,000 ledgers per directory), the directory and file containing ledgers 64065 to 64128 would be named:

- Directory name: FFFF05FE-64001-128000/

- File name: 0xFFFF05BE-64065-64128.xdr.gz

Enhancing Efficiency with Galexie

Galexie’s data store approach significantly enhances the operational efficiency and scalability of Stellar tools. Instead of relying on Captive Core to generate transaction metadata, these tools can now access precomputed transaction metadata from the data store. This approach improves data retrieval speed by an order of magnitude!

In addition, by freeing these tools from their dependency on Captive Core, a compute-heavy component, Galexie lowers hardware requirements and costs.

Here are two examples demonstrating the effectiveness of using this data store approach.

Example Use Cases:

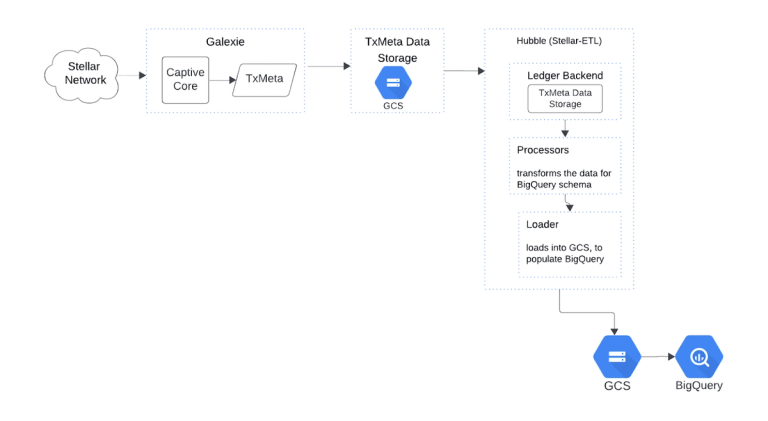

Hubble (Stellar-ETL)

Before Galexie, Hubble, the Stellar Analytics Platform, used Captive Core to ingest data from the Network. For batch processing, this was resource-intensive because Captive Core’s catch-up phase took longer than reading and processing the batch of data itself. By reading from a data lake instead, Hubble is decoupled from Captive Core: it can access transaction metadata directly from GCS without incurring Captive Core’s expensive startup cost. This significantly reduces execution time and overhead.

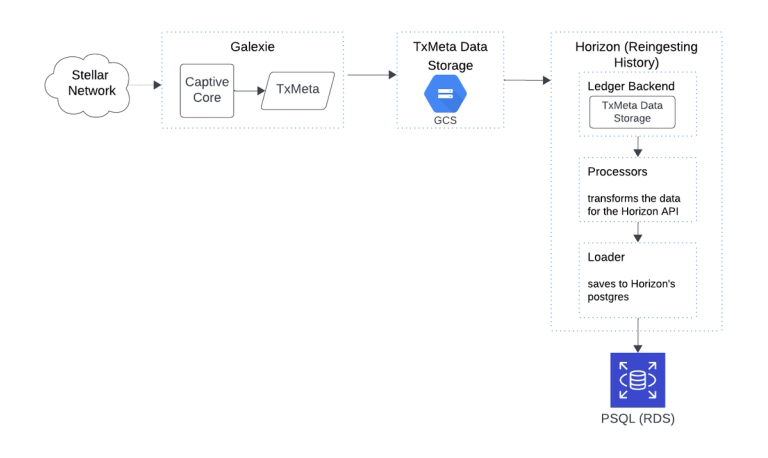

Horizon

Horizon, the Stellar API server, can reingest historical data within specified ledger ranges using an internal Captive Core instance. To handle large ledger ranges efficiently, Horizon can run multiple reingestion processes simultaneously, each with its own Captive Core. However, this approach is highly resource-intensive. Decoupling Horizon's reingestion from Captive Core lets it reingest data directly from the data lake, improving both speed and scalability.

Running your own Galexie

Running your own Galexie instance allows you to create a customized data lake with extracted raw ledger data. This approach offers several benefits:

- Flexibility: Customize the schema and retention strategy of your data.

- Improved Data Accessibility: Gain direct access to data, eliminating dependency on Horizon or Stellar Core.

- Scalability: Store the entire history of the Stellar network in a cost-effective way, with the ability to backfill or reingest history as needed.

For detailed instructions on how to install and run the service, refer to the Admin Guide.

Performance

We created a data lake using Galexie and noted the following observations:

- Running a single instance of Galexie is recommended only for point-forward data ingestion. Backfilling the full history of the network with a single instance would take 150 days.

- Running multiple parallel instances is recommended for filling a data lake.

- For example, we ran 40+ parallel instances of Galexie (on e2-standard-2 Compute Engine VMs) to create a data lake of Pubnet data. Backfilling 10 years of data took under 5 days, at an estimated compute cost of $600 USD.

- The size of the data lake for the entire history of Stellar Pubnet is ~3 TB.

- The operational cost of running Galexie to continuously export Network data is ~$160 USD per month. This includes $60 per month in compute and another $100 per month in storage costs.

Note: Time estimates related to backfilling a data lake depend on various factors such as the number of ledgers bundled in a single file, network latency, and bandwidth.
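Backfilling with parallel instances amounts to giving each instance its own slice of the ledger history. The Go sketch below shows one way to compute such a split; `splitRange` and `estimateDays` are hypothetical helpers, not Galexie features. It aligns each slice to file boundaries (assuming 1-based files of 64 ledgers) so that no two instances write the same file, and it estimates wall-clock time by assuming perfectly linear scaling, which the note above warns is a simplification.

```go
package main

import "fmt"

// ledgerRange is an inclusive range of ledger sequence numbers.
type ledgerRange struct{ First, Last uint32 }

// splitRange divides [first, last] into at most n (> 0) contiguous sub-ranges
// whose boundaries fall on perFile-sized, 1-based file boundaries, so no two
// instances write the same file. first is assumed to be a file start.
func splitRange(first, last, perFile uint32, n int) []ledgerRange {
	files := (last-first)/perFile + 1 // files touched, including a partial last one
	perInstance := (files + uint32(n) - 1) / uint32(n)
	var out []ledgerRange
	for start := first; start <= last; start += perInstance * perFile {
		end := start + perInstance*perFile - 1
		if end > last {
			end = last
		}
		out = append(out, ledgerRange{start, end})
	}
	return out
}

// estimateDays assumes work splits evenly and instances do not slow each other
// down; real runs also depend on latency, bandwidth, and bundle size.
func estimateDays(singleInstanceDays float64, instances int) float64 {
	return singleInstanceDays / float64(instances)
}

func main() {
	for i, r := range splitRange(1, 6400, 64, 3) {
		fmt.Printf("instance %d: ledgers %d-%d\n", i, r.First, r.Last)
	}
	fmt.Printf("~%.2f days with 40 instances\n", estimateDays(150, 40))
}
```

Under that linear assumption, the 150-day single-instance figure spread across 40 instances gives 3.75 days, in line with the observed backfill time of under 5 days.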

What’s Next

Future Development

Our goal at SDF is to advance truly decentralized applications and build smaller, reusable software components. Galexie aligns with this vision, playing an important role in building the Composable Data Platform. We aim to expand Galexie functionality by adding support for other object stores and integrating with new transformation tools.

How You Can Contribute

We welcome community contributions to support these future developments. Your involvement can help extend Galexie’s capabilities, add support for additional cloud storage solutions, and integrate new tools. For more details on how to get involved, refer to our Developer Guide.

What’s Coming Up?

In upcoming blog posts, we will focus on specific use cases, including refactoring Hubble and Horizon backends to use a data lake generated by Galexie. Stay tuned as we delve deeper into these applications and their benchmarks, and provide insights into how Galexie can enhance data handling and performance.

Feedback

If you have questions, suggestions, or feedback, join us on Discord in the #hubble and #horizon channels. Our team and community members are there to discuss and provide support.

More to Explore

- Composable Data Platform: A New Way to Access Data on Stellar (Molly Karcher)

- SDF’s Horizon: Limiting Data to 1 year (Molly Karcher)
