Blog Article

Why Quorums Matter and How Stellar Approaches Them

Author

Stellar Development Foundation

Publishing date

Stellar consensus protocol

Proof-of-agreement

Quorums

Today we released stellar-core v11.2, an upgrade that includes some crucial improvements to quorum set configuration and monitoring.

The basic idea: like all Federated Byzantine Agreement Systems, the Stellar Consensus Protocol relies on validators choosing good quorum sets in order to function properly. Prior to this upgrade, that was a difficult thing to do: validators configured their quorum sets manually, and there wasn’t an easy way for them to check those configurations to see if they created necessary quorum intersection.

To make things easier for validators, we engineered two solutions:

- Automatic quorum configuration

- A quorum intersection checker, which implements an algorithm developed by Łukasz Lachowski

These are big changes, and we wanted to let you know what they are, why we made them, and why they’re important. In order to get there, let’s start with a little background…

Why Quorum Configuration is Important

Traditional Byzantine Agreement Systems (BAS)

A distributed system consisting of individual nodes requires a mechanism that allows those nodes to reach agreement. Byzantine Agreement is one such mechanism.

BAS can tolerate some number f of byzantine nodes. In practice, for the system to tolerate f faulty nodes, it must have at least 2f +1 honest nodes, or 3f + 1 nodes in total.

Let’s illustrate this with the classical Byzantine Generals Problem. Faced with an opposing army, a general and his lieutenants must agree on whether to attack or retreat. However, any lieutenant (or even a general) can be a traitor. A general sends orders to attack or retreat to his lieutenants such that (1) all loyal lieutenants obey his orders, and (2) if the general is loyal, then every loyal lieutenant obeys the general’s order.

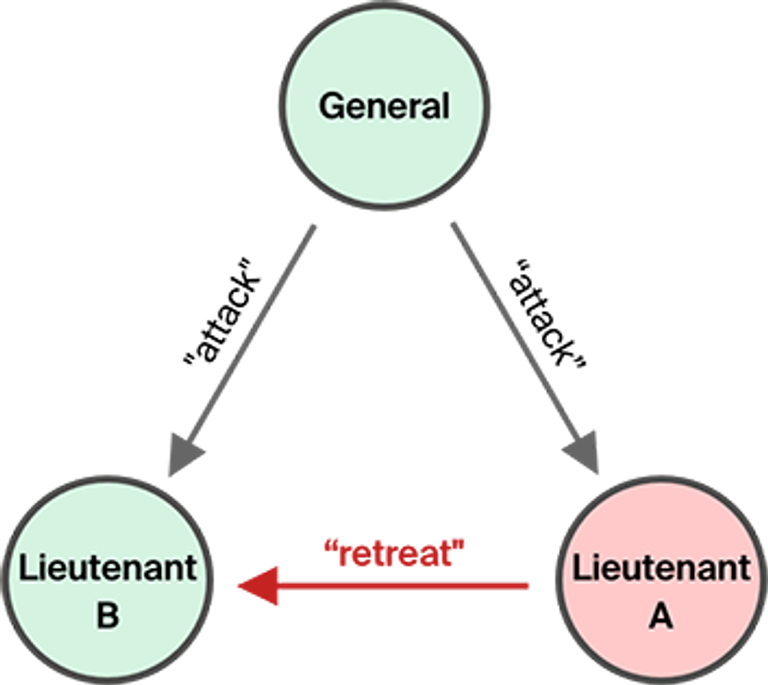

Consider the case with one general and two lieutenants. Suppose one of the lieutenants (A) is a traitor: she receives an order to attack, but tells another lieutenant to retreat. The other lieutenant (B) receives contradicting orders (to attack from the general, and to retreat from lieutenant A), and has no way to verify who is loyal and who is a traitor.

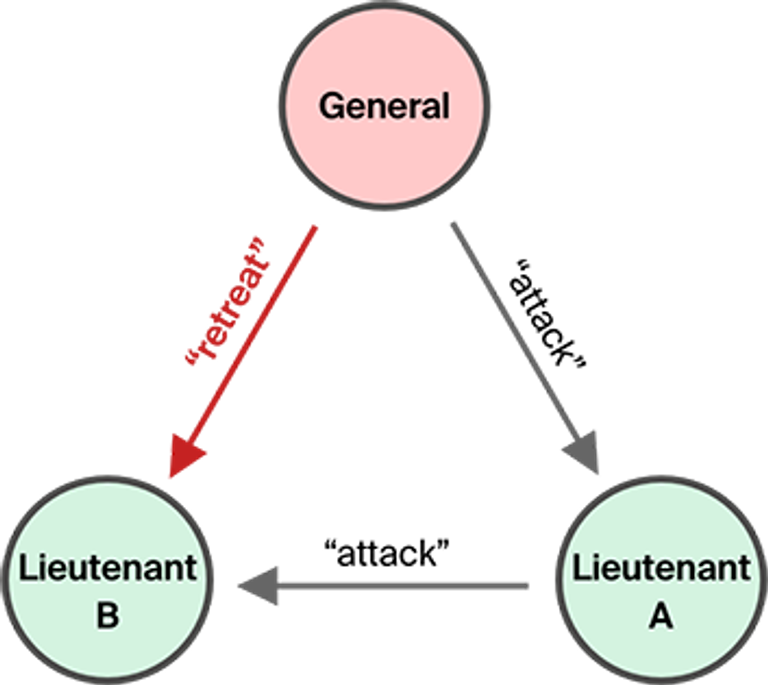

On the other hand, suppose both lieutenants are loyal, but the general is a traitor, and sends an order to attack to lieutenant A and retreat to lieutenant B. The loyal lieutenant A sends “attack” to lieutenant B, but lieutenant B got “retreat” from the general, and thus can’t proceed. This system can’t tolerate a single traitor to make a decision.

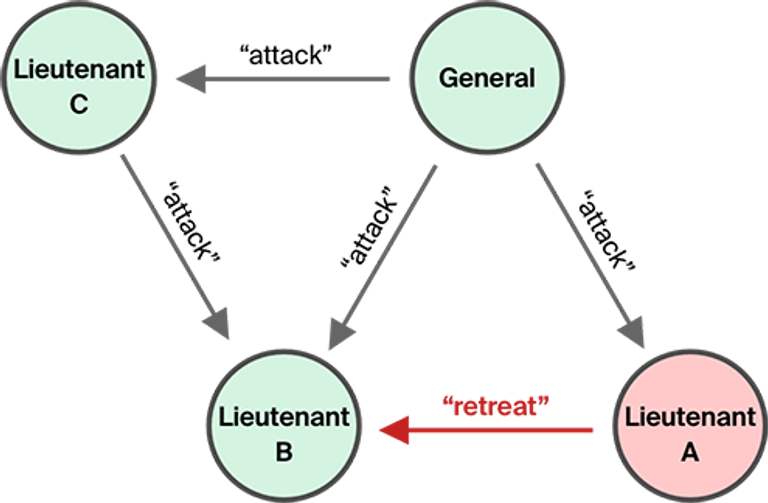

Now let’s add another lieutenant (C). Suppose the same malicious lieutenant A mentioned above received an attack order again, but tells lieutenant B to retreat. Lieutenant B receives two orders to attack (from the general and from C), and one faulty order to retreat. Lieutenant B can now identify a traitor and execute the correct order. Such a system tolerates one failure.

Federated Byzantine Agreement

In BAS, we simply assume that we need agreement of more than two thirds of the whole system. Each participant is equally trusted. But consider the real world: what if you have a network of nodes that represent different organizations: banks, non-profits, remittance providers, coffee merchants, etc.? You do not necessarily care if the coffee merchant is byzantine. What if you only want to trust and depend on a smaller group of nodes to achieve consensus?

This is exactly what an FBA system allows you to do: it lets you configure your own quorum slice to tell the world who you trust.

The advantage of the flexible trust an FBA provides is that it makes things harder for a malicious actor to do bad things: even if he adds a million evil nodes to the network, they won’t have any effect unless he convinces a critical mass of nodes to include them in their quorum slices. He’d have to convince a lot of people to trust a lot of nodes. On the other hand, a traditional BA system would be compromised, since each node trusts every other node on the network.

It is worth noting that a BA system is actually an FBA system too! In BA, every node has the same configuration, where every set of more than two thirds of the entire system is a quorum slice, so it is redundant to explicitly configure quorum slices. On the other hand, FBA generalizes BA, letting a node pick its own slices.

Why Quorum Configuration is Hard

With great power comes great responsibility. The flexibility of user-defined quorum configurations allows truly open network membership (anybody can join) and decentralized control (no central authority dictates whose vote is required for consensus). However, freedom to configure your own quorum slices may result in invalid network configurations such that:

- Parts of the network may depend on unreliable (not available) nodes, making it easier to stop making consensus progress. The network halts.

- Parts of the network might not know about each other, producing different consensus results. The network forks.

In order to prevent halts and forks, a network configuration needs to balance properties of liveness and safety.

Liveness depends on the reliability of nodes in a quorum: if too many nodes go down, a quorum stops making progress. Safety relies on quorum intersection: if quorum sets don’t overlap, nodes end up with different consensus results.

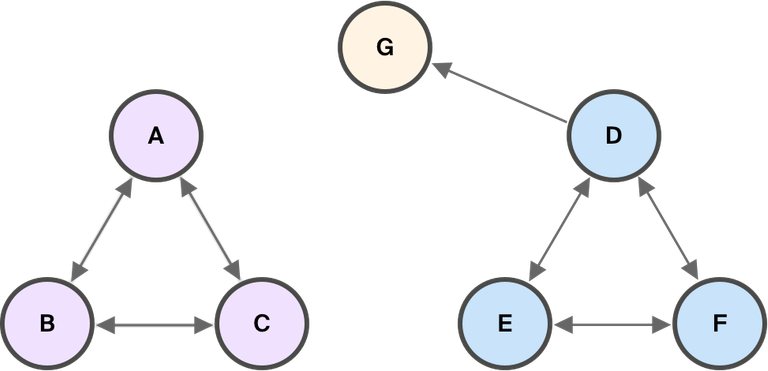

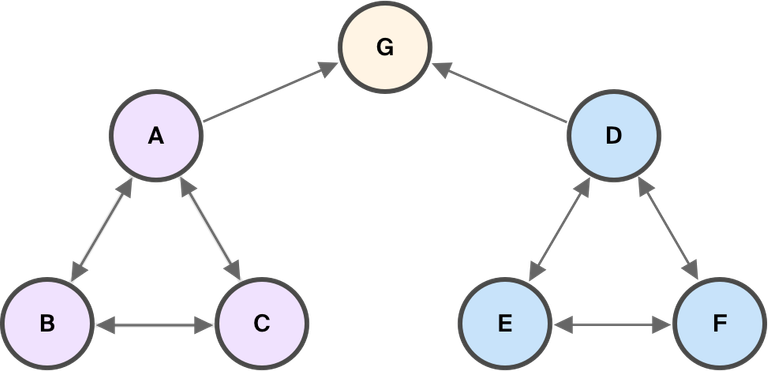

The diagram below shows two different configurations that affect quorum intersection (assume G is not byzantine).

Note that while quorum intersection is necessary for safety, it’s not enough to guarantee safety: if node G becomes byzantine and decides to stop sending messages, the network may still fork.

You Can’t Always Get What You Want

The Rolling Stones probably weren’t referring to distributed systems, but they summarized the problem nicely. It is impossible to achieve both safety and liveness in a system prone to network partitions (if you are no stranger to distributed systems, you might recognize that the CAP theorem is an example of this fundamental fact).

Although in the context of FBA systems liveness varies across quorums (some quorums might make progress, while others halt due to unreliable nodes), there is still a tradeoff between safety and liveness, influenced by the overall quorum configuration.

To illustrate this, consider a network configured with two quorums (each able to tolerate one failure) that overlap by a single node. If that node becomes unavailable, both quorums may agree on different values, jeopardizing safety (but both sustaining liveness). On the other hand, suppose two quorums are configured to overlap by 50% of the nodes. If those overlapping nodes behave unreliably, both quorums might lose liveness, prioritizing safety.

How We Make Quorum Configuration Easier

Imagine trying to keep all of that in mind as you set up a Stellar-network validator and manually construct a quorum set. You want to balance safety and liveness and make choices that ensure quorum intersection, but it’s easy to make mistakes, and it’s hard to keep a vigilant watch on the nodes in your quorum set. If you miscalculate thresholds for your node’s quorum slices, which is an easy thing to do, you jeopardize quorum intersection, and therefore, network safety. If you trust too many unreliable nodes, you may hamper liveness and prevent the network from making further progress. We realize that quorum configuration is hard, and so we’ve started engineering solutions to make it easier.

Automatic Quorum Configuration

As a first step towards making quorum configuration simpler and more intuitive, we are automating part of the process. Now, stellar-core groups validators run by the same organization, constructs a hierarchy based on validator quality, and sets thresholds based on best practices. Here’s how it works:

Validator Grouping

Redundancy within an organization on the Stellar network is a good practice, which is why we recommend running at least three validators for high availability. Previously, stellar-core did not distinguish between validators and organizations, making it the user’s responsibility to configure slices and thresholds correctly. Consider a simple example of a quorum slice configuration:

{A, B, C, D, E, F, G} — threshold 67%

Seems reasonable. There are seven nodes, and five are needed to agree to reach consensus. However, what if instead of node names, we simply state the organization name that runs it?

{SDF1, SDF2, SDF3, SDF4, SDF5, LOBSTR, SATOSHIPAY} — threshold 67%

The problem is more visible now. If LOBSTR and SATOSHIPAY do not agree with what SDF says, SDF will proceed anyway, since its own nodes agree, meeting the threshold.

The issue here is that the organizations are not weighted correctly because some of them happen to run more nodes than others. From the consensus point of view, we care about an organization as a whole, not its individual validators.

To reflect that concern, stellar-core now allows you to specify HOMEDOMAIN for each validator. Nodes run by an organization have the same domain, and are automatically grouped together with a simple majority (51%) threshold. If HOMEDOMAIN is specified correctly for each node, the above configuration automatically becomes:

{{SDF1, SDF2, SDF3, SDF4, SDF5 — threshold 51%}, LOBSTR, SATOSHIPAY} — threshold 67%

Validator Quality

Validators now specify the quality of nodes in their quorum slices. Quality is somewhat subjective, but the rating system works best if you follow the guidelines and use common sense when assigning values.

There are three categories: HIGH, MEDIUM, LOW. A high quality validator is reliable (part of an organization that runs at least three validators), publishes a history archive, and has a track record of good behavior on the network.

A medium quality validator might be less reliable or less known to the network. It is a candidate to become a high-quality validator if it remains reliable, starts publishing an archive, and gains the respect of the core network.

A low quality validator isn’t known or trusted by the network yet. If it proves itself reliable and trustworthy over time, it can be promoted to a higher quality.

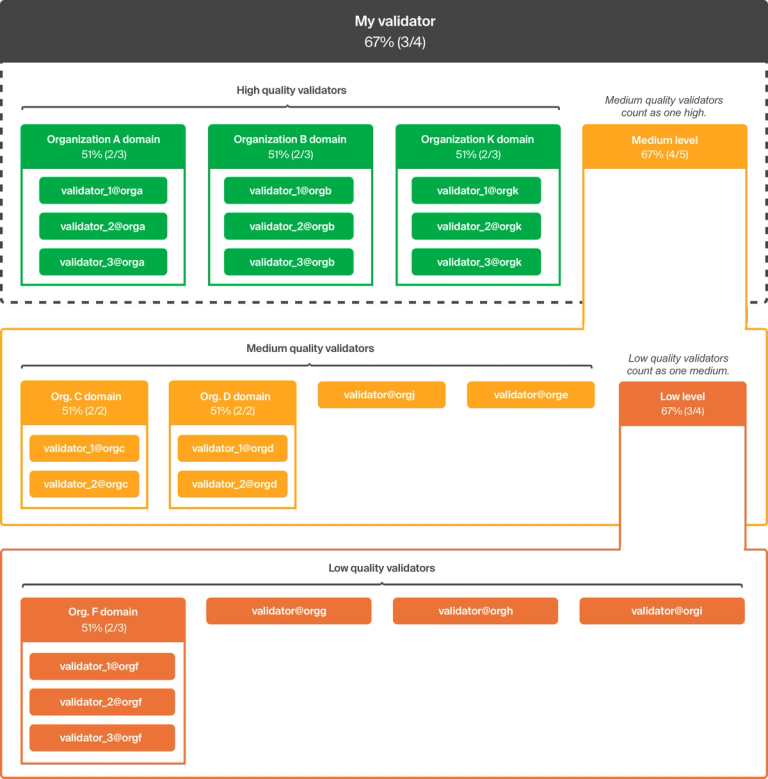

The following diagram shows how the nodes are grouped after you specify a quality rating for each node in your quorum slice:

Structuring nodes this way helps liveness, as high quality nodes are likelier to be available (due to stricter reliability requirements). In case a high-quality node is byzantine or goes down, the medium quality group acts as a back-up to ensure consensus. In other words, the agreement among all medium quality validators is equal to one vote in the high-quality tier. Similarly, the agreement among all low quality validators is equal to one vote in the medium-quality tier.

Checking Quorum Intersection

Recall that quorum intersection is essential for network safety. Continuous quorum monitoring is a crucial part of identifying misconfigurations. It turns out, however, that identifying lack of quorum intersection is hard. In fact, it’s an NP-complete problem: a solution cannot be found efficiently (in polynomial time), but a given solution can be verified efficiently. It is difficult, because for a network of N nodes, we must iterate through 2ᴺ possible subsets and check for absence of quorum intersection.

Despite that difficulty, Stellar-core v11.2 offers a mechanism to check for quorum intersection relatively quickly by recognizing that some subsets don’t actually need to be checked. By exploring all sets in a particular order, we stop checking unnecessary sets as soon as possible. For more information on the algorithm, check out this paper: https://arxiv.org/abs/1902.06493

The feature is enabled via the new configuration QUORUM_INTERSECTION_CHECKER, and is triggered in the background when a node’s quorum set changes. If quorum intersection is not found, a warning is emitted, and the information about the split is added to the output of the info endpoint.

The Future Is Exciting

We are committed to our mission to promote global financial inclusion. We believe that the challenges in front of us require a methodical, incremental approach. The changes we are introducing in this release are the first step toward safer and stronger quorums, and we couldn’t be more excited to continue improving the experience on Stellar!

You can read the full release notes here.